Apache Kafka is a popular platform for building real-time data pipelines and is often used alongside Python applications. Whether you’re a beginner or an experienced user, this guide walks you through installing Kafka on your Ubuntu machine. Let’s get started.

What is Apache Kafka?

Apache Kafka is an open-source platform for processing real-time streaming data from various sources. It uses a publish-subscribe model to handle high-volume data streams efficiently.

With features like partitioning, replication, and fault tolerance, Kafka excels at high-throughput message processing in finance, manufacturing, and healthcare industries. Everyday use cases include tracking website activity, reporting metrics, log aggregation, and stream processing.

Kafka reliably stores event streams, processes them in real-time, and offers scalability and security. Deployable across environments, it utilizes a distributed messaging system over TCP for data transmission. Let's quickly look at how to install Apache Kafka on Ubuntu.

Prerequisites

- An Ubuntu server with a minimum of 4GB of RAM. With less than 4GB, the Kafka service could fail to start, so I recommend a server with at least that amount, plus some extra as a buffer. (Webdock's SSD Bit is very affordable!)
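
To check the RAM requirement before going further, something like the following sketch could be used. It reads the MemTotal line from /proc/meminfo; the function names and the 3800 MiB threshold (a small margin below a nominal 4GB) are my own assumptions, not part of any Kafka tooling.

```shell
# Print total memory in MiB, read from a meminfo-style file.
# The optional path argument only exists so the function can be tried on a sample file.
mem_total_mib() {
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 }' "${1:-/proc/meminfo}"
}

# Succeed when total memory is at least the given number of MiB.
has_min_ram() {
  [ "$(mem_total_mib "$2")" -ge "$1" ]
}

# 3800 MiB rather than 4096, since a nominal 4GB machine reports slightly less (assumed margin).
if has_min_ram 3800; then
  echo "RAM looks sufficient for Kafka"
else
  echo "Less than about 4GB of RAM detected; the Kafka service may fail to start"
fi
```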

Installing OpenJDK

OpenJDK is an open-source implementation of the Java Platform, Standard Edition. It provides tools and libraries to develop Java applications for free. To check if Java is pre-installed:

$ java -version

In most cases, it won't be preinstalled. So we'll have to install it and get started. Start by updating the package index:

$ sudo apt update -y

Then, proceed to install it:

$ sudo apt install openjdk-21-jre -y

Then recheck the version to verify the installation.

To compile and run certain Java programs, you might need the Java Development Kit (JDK) in addition to the Java Runtime Environment (JRE). To get the JDK, run the following command, which also sets up the JRE for you:

$ sudo apt-get install openjdk-21-jdk -y

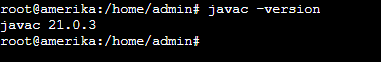

To confirm the installation of JDK, you need to check the version of javac, which is the Java compiler:

$ javac -version
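
If you prefer a single check for both tools, a small helper along these lines could work. It is only a sketch: check_tools is a hypothetical function name, and it merely tests whether the commands are on the PATH, not which Java version they belong to.

```shell
# Report whether each named tool is available on the PATH.
# Returns non-zero if at least one of them is missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

check_tools java javac || echo "Install the OpenJDK packages above before continuing"
```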

Installing Kafka

Because Kafka handles requests over a network, the first step is to create a dedicated user for the service. This helps to reduce potential harm to your Ubuntu system if the Kafka server gets compromised. Log in to your server as a sudo user and create the new user. I will name the user ‘franz.’ 😉

$ sudo adduser franz

Answer the questions it asks and set a password. Remember the password, as we'll need it in the upcoming steps.

To grant the franz user the necessary privileges for installing Kafka’s dependencies, you should add the user to the sudo group using the adduser command:

$ sudo adduser franz sudo

And then log in:

$ su -l franz

Once you have set up a user for Kafka, the next step is to download and unpack Kafka. First, make a folder named core-files in /home/franz to keep your downloaded files organized:

$ mkdir ~/core-files

Then, download the Kafka source archive using curl:

$ curl "https://archive.apache.org/dist/kafka/3.7.0/kafka-3.7.0-src.tgz" -o ~/core-files/kafka.tgz

This command downloads the Apache Kafka 3.7.0 source code as a compressed file named kafka.tgz into the core-files directory. At the time of writing this guide, the latest stable version is 3.7.0, so you may have to adjust the version number in the future. You can keep track of new releases in Apache Kafka's repository.
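
It can be worth verifying the download before unpacking it. The sketch below compares the archive's SHA-512 digest with a published checksum file. It assumes the checksum file uses the "name: HEX HEX ..." layout (uppercase hex in space-separated groups, possibly wrapped over several lines); verify_sha512 is a hypothetical helper name, and a checksum file in a different layout would need different parsing.

```shell
# Compare a file's SHA-512 digest with a published checksum file.
# Assumes the "name: HEX HEX ..." layout described above.
verify_sha512() {
  archive="$1"
  sumfile="$2"
  actual=$(sha512sum "$archive" | awk '{ print $1 }')
  # Drop the "name:" prefix, remove all whitespace, and lowercase the hex digits.
  expected=$(sed 's/^[^:]*://' "$sumfile" | tr -d ' \t\r\n' | tr 'A-F' 'a-f')
  [ -n "$expected" ] && [ "$actual" = "$expected" ]
}
```

Assuming the checksum file sits next to the archive under the same URL with a .sha512 suffix and has been saved as ~/core-files/kafka.tgz.sha512, running verify_sha512 ~/core-files/kafka.tgz ~/core-files/kafka.tgz.sha512 succeeds only when the two digests match.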

First, create a directory named kafka and then navigate into this folder. This will serve as the main directory for your Kafka setup:

$ mkdir ~/kafka && cd ~/kafka

And then extract the downloaded file. Since it is a gzip-compressed tar archive, I'll use tar to unpack it:

$ tar -xvzf ~/core-files/kafka.tgz --strip 1

Notice the --strip 1 flag? That's to ensure the archive's contents are extracted directly into your ~/kafka/ directory, not a subdirectory.
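
To see what the flag does without touching your Kafka directory, here is a small throwaway demonstration. It builds a made-up archive with one top-level folder (kafka-demo is just a placeholder name) and extracts it twice, using --strip-components=1, the long form of --strip 1.

```shell
# Build a throwaway archive with one top-level folder, like a release tarball.
work=$(mktemp -d)
mkdir -p "$work/src/kafka-demo/bin"
echo "placeholder" > "$work/src/kafka-demo/bin/start.sh"
tar -czf "$work/demo.tgz" -C "$work/src" kafka-demo

# Without stripping, the top-level folder is kept.
mkdir "$work/plain" && tar -xzf "$work/demo.tgz" -C "$work/plain"
# With --strip-components=1, the top-level folder is dropped.
mkdir "$work/stripped" && tar -xzf "$work/demo.tgz" -C "$work/stripped" --strip-components=1

ls "$work/plain"      # kafka-demo
ls "$work/stripped"   # bin
```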

After downloading and extracting the archive, you can start configuring your Kafka server.

A Kafka topic is a named category or feed to which messages are published. Recent Kafka versions, including 3.7.0, allow topic deletion by default (delete.topic.enable defaults to true), but setting it explicitly makes the behavior obvious and guards against a changed default. To do so, edit the configuration file:

$ sudo nano ~/kafka/config/server.properties

Start by adding a configuration that lets you remove Kafka topics. Insert this line at the end of the file:

delete.topic.enable = true

And then, update the log.dirs property. Locate the log.dirs property, approximately on the 46th line, and replace the current path with the following:

log.dirs=/home/franz/kafka/kafka-logs

Save and close the file by pressing CTRL + X, then Y, and then Enter.
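
If you would rather script these two changes than make them in nano, a helper like the one below might do. It is a sketch under a few assumptions: set_prop is a hypothetical name, the key is used as a regular expression (so dots match any character), and the value must not contain the characters | or &.

```shell
# Set key=value in a properties file: replace the existing line if present,
# otherwise append a new one. Commented-out lines are left untouched.
set_prop() {
  file="$1"
  key="$2"
  value="$3"
  if grep -q "^${key}[[:space:]]*=" "$file"; then
    # Write to a temporary file first so the edit does not depend on sed -i.
    sed "s|^${key}[[:space:]]*=.*|${key}=${value}|" "$file" > "$file.tmp" &&
      mv "$file.tmp" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}
```

For example, set_prop ~/kafka/config/server.properties log.dirs /home/franz/kafka/kafka-logs followed by set_prop ~/kafka/config/server.properties delete.topic.enable true would apply the same two settings as the manual edit.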

Systemd unit files on Ubuntu let you set up how systemd handles services, sockets, devices, and other parts of the system. These files outline the actions and dependencies required to start up the system. We'll need a similar systemd file for Kafka, which will assist you in executing typical service actions like initiating, halting, and rebooting Kafka following the standard procedures for Linux services.

In this setup, Kafka relies on ZooKeeper to handle the cluster’s state and settings. So, I’ll start by creating a unit file for ZooKeeper:

$ sudo nano /etc/systemd/system/zookeeper.service

With the following content:

[Unit]
Requires=network.target remote-fs.target
After=network.target remote-fs.target

[Service]
Type=simple
User=franz
ExecStart=/home/franz/kafka/bin/zookeeper-server-start.sh /home/franz/kafka/config/zookeeper.properties
ExecStop=/home/franz/kafka/bin/zookeeper-server-stop.sh
Restart=on-abnormal

[Install]
WantedBy=multi-user.target

Save and exit the file once added.

The [Unit] section indicates that Zookeeper needs both the network and file system to be up and running before it can begin operating.

The [Service] section tells systemd to use the zookeeper-server-start.sh and zookeeper-server-stop.sh scripts to start and stop the service. It also specifies that ZooKeeper should be restarted automatically if it exits abnormally.
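
If you use a different user name or install directory, the same unit can be generated from a small template instead of being typed by hand. The function below is only a sketch: render_zookeeper_unit is a hypothetical name, and it simply prints the unit text described above with the two values filled in.

```shell
# Print a ZooKeeper unit file for the given service user and Kafka directory.
render_zookeeper_unit() {
  unit_user="$1"
  kafka_dir="$2"
  cat <<EOF
[Unit]
Requires=network.target remote-fs.target
After=network.target remote-fs.target

[Service]
Type=simple
User=${unit_user}
ExecStart=${kafka_dir}/bin/zookeeper-server-start.sh ${kafka_dir}/config/zookeeper.properties
ExecStop=${kafka_dir}/bin/zookeeper-server-stop.sh
Restart=on-abnormal

[Install]
WantedBy=multi-user.target
EOF
}
```

The output can be piped into place with render_zookeeper_unit franz /home/franz/kafka | sudo tee /etc/systemd/system/zookeeper.service, and running sudo systemctl daemon-reload afterwards makes sure systemd picks up the new file.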

Let's set up the systemd service file for Kafka:

$ sudo nano /etc/systemd/system/kafka.service

By adding the following content:

[Unit]
Requires=zookeeper.service
After=zookeeper.service

[Service]
Type=simple
User=franz
ExecStart=/bin/sh -c '/home/franz/kafka/bin/kafka-server-start.sh /home/franz/kafka/config/server.properties > /home/franz/kafka/kafka.log 2>&1'
ExecStop=/home/franz/kafka/bin/kafka-server-stop.sh
Restart=on-abnormal

[Install]
WantedBy=multi-user.target

Save and exit the file.

In the [Unit] section, you indicate that this unit file relies on zookeeper.service. This dependency ensures that zookeeper will start up automatically when the kafka service begins.

In the [Service] section, you tell systemd to use the kafka-server-start.sh and kafka-server-stop.sh scripts to handle starting and stopping the service. Additionally, you specify that Kafka should restart if it terminates unexpectedly.

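Before we move forward, we need to build the Java Archive (JAR) files with Gradle, since we downloaded the source code rather than a prebuilt release. Otherwise, the service may fail with an exit-code=1 error. Run this from inside the ~/kafka directory: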

$ ./gradlew jar -PscalaVersion=2.13.12

This command runs the Gradle wrapper script to build a JAR file using Scala version 2.13.12. It compiles the project’s source code and packages it into a JAR file. However, depending on your hardware, this process might take a significant time. It took me about 15 minutes to compile, so it might be a good time to get a quick stretch.

All set up and done? Let's start Kafka:

$ sudo systemctl start kafka

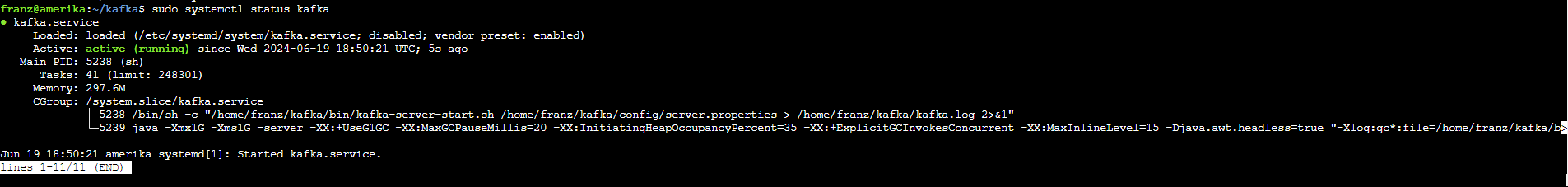

And check status to confirm it works well:

$ sudo systemctl status kafka

The status indicates that the Kafka server is now active on port 9092, the standard Kafka port. Although I have started the Kafka service, it won’t start on its own after the server is rebooted. To have ZooKeeper and Kafka start automatically at boot, enable both services, starting with ZooKeeper:

$ sudo systemctl enable zookeeper

Also run:

$ sudo systemctl enable kafka
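
The broker can take a few seconds to begin listening after the service starts, so it may help to wait for port 9092 before testing. The helper below is a sketch that relies on bash's /dev/tcp pseudo-device and the coreutils timeout command; port_open and wait_for_port are hypothetical names.

```shell
# Succeed when a TCP connection to host:port can be opened within one second.
# Relies on bash's /dev/tcp pseudo-device and the coreutils timeout command.
port_open() {
  timeout 1 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Poll once per second until the port opens or the attempts are used up.
# The third argument is the number of attempts and defaults to 30.
wait_for_port() {
  host="$1"
  port="$2"
  tries="${3:-30}"
  while [ "$tries" -gt 0 ]; do
    port_open "$host" "$port" && return 0
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}
```

For instance, wait_for_port localhost 9092 returns successfully as soon as something accepts connections on that port, and fails after roughly 30 seconds otherwise.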

Now, let's quickly check to see if everything works. Let's start by creating a topic. I'll call this TheMetamorphosis.

$ ~/kafka/bin/kafka-topics.sh --create --topic TheMetamorphosis --partitions 1 --replication-factor 1 --bootstrap-server localhost:9092

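This command creates the topic with a single partition and a replication factor of 1, meaning each message is stored on one broker only, with no extra copies for fault tolerance. That is fine for a single-node test setup like this one.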

Now, let's echo a random quote from the acclaimed book:

$ echo "I only fear danger where I want to fear it." | ~/kafka/bin/kafka-console-producer.sh --bootstrap-server localhost:9092 --topic TheMetamorphosis > /dev/null

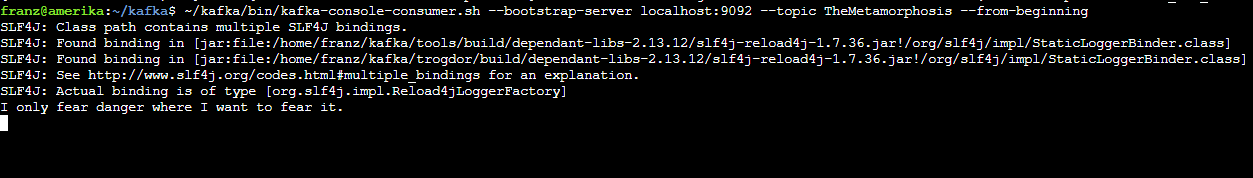

To create a Kafka consumer, use the kafka-console-consumer.sh script. You need to provide the Kafka broker's hostname and port, along with the topic name. The command below shows how to consume messages from the topic:

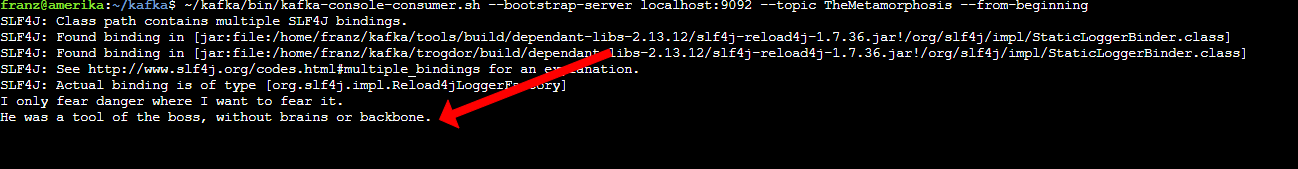

$ ~/kafka/bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic TheMetamorphosis --from-beginning

I have included the --from-beginning flag so I can read messages published before the consumer starts.

The output would be the quote I echoed after running the command:

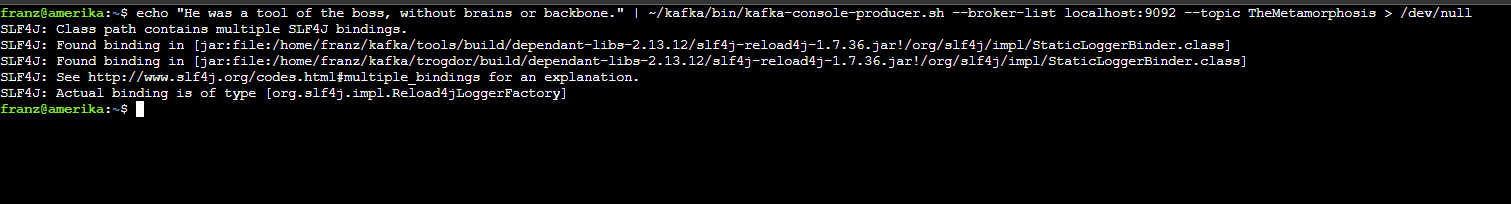

Let's watch it live from the consumer's side. Leave the consumer running, log in to a separate session/terminal as the franz user, and publish another message. I'll include another random quote from the book:

$ echo "He was a tool of the boss, without brains or backbone." | ~/kafka/bin/kafka-console-producer.sh --bootstrap-server localhost:9092 --topic TheMetamorphosis > /dev/null

In the initial terminal, you'll see the new message appear in the consumer's output.

After you finish testing, hit CTRL+C to stop the consumer script in your main terminal.

The following steps can be considered optional. However, it might be a good idea to do them to enhance your server's security. Since the franz user is somewhat "exposed," it would be a great idea to lock its password so that the account can only be reached through the sudo su command.

This way, even if the user’s password gets leaked, it would be very difficult for anyone to access the Kafka configuration and make a mess. Just run:

$ sudo passwd franz -l

After locking the password, you can log in to the franz account by running the following as another sudo/root user:

$ sudo su - franz

You can revert this change, of course, by replacing the -l flag with the -u flag. It would also be a good idea to remove sudo privileges from Franz:

$ sudo deluser franz sudo
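
To confirm that the password lock took effect, the output of passwd -S can be inspected. Its second field is L for a locked password, P for a usable one, and NP for no password. The helper below is a sketch that interprets that field; password_state is a hypothetical name.

```shell
# Interpret the status field (second column) of a line of passwd -S output.
password_state() {
  case "$(printf '%s\n' "$1" | awk '{ print $2 }')" in
    L)  echo "locked" ;;
    P)  echo "usable" ;;
    NP) echo "none" ;;
    *)  echo "unknown" ;;
  esac
}
```

Run as another sudo user, password_state "$(sudo passwd -S franz)" should print locked once the password has been locked.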

Conclusion

In conclusion, installing Apache Kafka on Ubuntu is a straightforward process that enables you to leverage its powerful real-time data streaming capabilities. By following the steps outlined in this article, you can set up Kafka, configure brokers and topics, and start publishing and consuming messages.

With Kafka up and running, you can unlock new possibilities for real-time application data processing and analysis.

Meet Aayush, a WordPress website designer with almost a decade of experience who crafts visually appealing websites and has a knack for writing engaging technology blogs. In his spare time, he enjoys illuminating the minds around him.